Serverless Architecture using Amazon AWS

In this post, I'll dive into a serverless web app architecture that serves as a learning project for me while I work with seasoned Cloud Architects to improve my architecture skills. As I continue my cloud computing training, I'm embracing the challenges, security vulnerabilities, and impractical service choices that come with the journey. This evolving cloud experience is both exciting and educational. So, let’s explore my experimental cloud architecture for an application built on serverless infrastructure.

Let’s start with the Business NEED

Business Problem: A startup company is launching a new e-commerce platform that needs to scale efficiently to handle variable traffic loads while ensuring low latency and high availability. The primary focus is on quickly getting to market with a cost-effective, serverless solution that can grow with user demand.

Key Requirements:

High availability

Low latency

Cost-effective

Scalable infrastructure

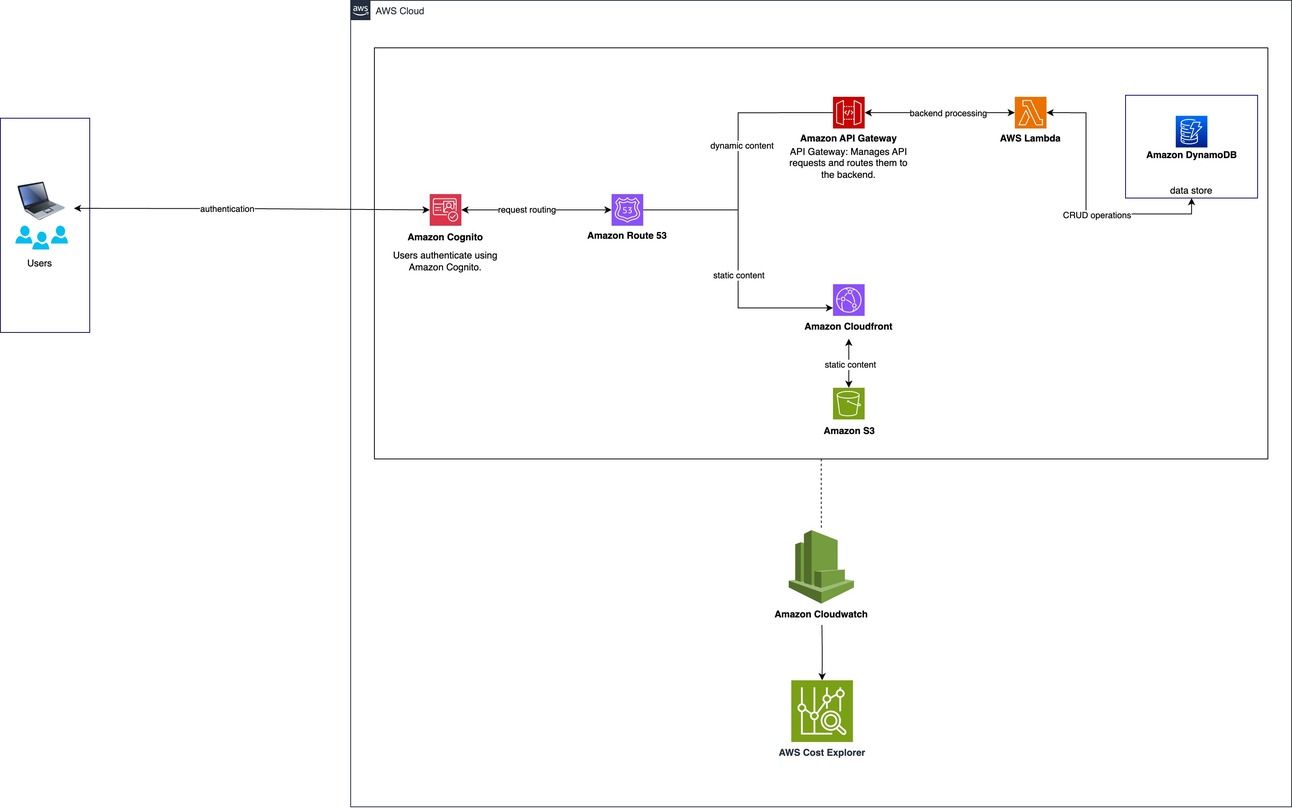

Proposed Architecture:

Users interact with the application through Amazon Cognito for authentication.

Requests are routed using Amazon Route 53.

Static content is delivered through Amazon CloudFront and stored in Amazon S3.

Dynamic content is handled by Amazon API Gateway, AWS Lambda for backend processing, and Amazon DynamoDB for data storage.

Monitoring and cost management are handled by Amazon CloudWatch and AWS Cost Explorer.
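One detail worth making concrete: Route 53 answers DNS queries rather than inspecting HTTP requests, so a static/dynamic split like the one above is usually expressed as two hostnames. The sketch below builds a Route 53 change batch with two alias records, one pointing a site hostname at CloudFront and one pointing an API hostname at an API Gateway custom domain. The domain names, the CloudFront distribution domain, and the API Gateway hosted zone ID are hypothetical placeholders; Z2FDTNDATAQYW2 is the fixed hosted zone ID AWS documents for CloudFront alias targets.

```python
import json

# AWS documents this fixed hosted zone ID for every CloudFront alias target.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_record(name: str, target_dns: str, target_zone_id: str) -> dict:
    """Build one UPSERT change for a Route 53 alias A record."""
    return {
        "Action": "UPSERT",
        "ResourceRecordSet": {
            "Name": name,
            "Type": "A",
            "AliasTarget": {
                "HostedZoneId": target_zone_id,
                "DNSName": target_dns,
                "EvaluateTargetHealth": False,
            },
        },
    }


# Hypothetical hostnames and targets for the startup's shop.
change_batch = {
    "Comment": "Static site via CloudFront, dynamic API via API Gateway",
    "Changes": [
        # Static content: www hostname -> CloudFront distribution (backed by S3).
        alias_record("www.example-shop.com", "d111111abcdef8.cloudfront.net",
                     CLOUDFRONT_HOSTED_ZONE_ID),
        # Dynamic content: api hostname -> API Gateway regional custom domain.
        alias_record("api.example-shop.com",
                     "d-abc123.execute-api.us-east-1.amazonaws.com",
                     "ZEXAMPLEAPIGW"),  # placeholder; the real ID comes from the custom domain
    ],
}

print(json.dumps(change_batch, indent=2))
```

Under these assumptions, the dictionary could be passed as the `ChangeBatch` argument of the boto3 Route 53 client's `change_resource_record_sets` call, together with the `HostedZoneId` of the shop's own hosted zone.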

Why Serverless?

Serverless computing eliminates server management, auto-scales with demand, and charges only for usage, which improves cost-efficiency and deployment speed and lets teams focus on development tasks. On provisioned servers, costs can skyrocket, especially when capacity must cover peak web traffic to a company's online platform (app or site).

You see, going serverless is like a football team having no fixed positions: flexible plays, instant substitutions, and no stadium maintenance. Focus on winning games, not the field. “Well, wouldn’t that change the game?” It sure will, but for our customers’ benefit.

Key metrics to measure “serverless” success

I am building my portfolio in Cloud computing, so it is crucial for me to further my education on implementing metrics that measure the impact and success of serverless migrations. Below are some key areas I believe a company should consider to establish clear, relevant, and achievable metrics, beginning with User Experience, as usability is my first love, lol.

User Experience Metrics:

User Satisfaction: Gather feedback through surveys and user reviews.

Usability Testing: Conduct regular usability tests to ensure the platform meets user needs.

Engagement Rates: Track user interaction with the platform, such as session duration and page views.

Performance Metrics:

Load Time: Monitor the speed at which pages load.

System Uptime: Ensure high availability and minimal downtime.

Error Rates: Track and reduce the number of errors or crashes.
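The error-rate and uptime metrics above reduce to simple ratios. A minimal sketch, assuming the request and downtime counts have already been pulled from monitoring (for example from API Gateway metrics in CloudWatch); the `PeriodStats` type and the numbers are illustrative, not measured data.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Hypothetical counts for one reporting period (e.g. exported from CloudWatch)."""
    total_requests: int
    error_responses: int      # e.g. 5XX responses returned by API Gateway
    downtime_minutes: float
    period_minutes: float


def error_rate_pct(stats: PeriodStats) -> float:
    """Share of requests that failed, as a percentage."""
    if stats.total_requests == 0:
        return 0.0
    return 100.0 * stats.error_responses / stats.total_requests


def uptime_pct(stats: PeriodStats) -> float:
    """Share of the period the service was available, as a percentage."""
    return 100.0 * (stats.period_minutes - stats.downtime_minutes) / stats.period_minutes


# A 30-day month is 43,200 minutes; 43.2 minutes of downtime is 99.9% uptime.
month = PeriodStats(total_requests=2_000_000, error_responses=1_000,
                    downtime_minutes=43.2, period_minutes=30 * 24 * 60)
print(f"error rate: {error_rate_pct(month):.3f}%")  # error rate: 0.050%
print(f"uptime:     {uptime_pct(month):.2f}%")      # uptime:     99.90%
```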

Business Metrics:

Sales Growth: Measure increases in sales or conversions directly attributable to the new system.

Customer Retention: Track repeat usage and retention rates.

Cost Efficiency: Evaluate cost savings from improved processes or reduced overhead.

Development Metrics:

Deployment Frequency: How often new features or updates are deployed.

Lead Time for Changes: Time taken from a feature request to deployment.

Defect Rates: Measure the number of defects found during and after development.
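Deployment frequency, lead time for changes, and defect rate can be computed from a simple log of when each change was requested and when it shipped. The records and defect count below are hypothetical; in practice they would come from an issue tracker and a CI/CD pipeline.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical change log: (feature requested, change deployed to production).
changes = [
    (datetime(2024, 6, 3, 9, 0), datetime(2024, 6, 5, 17, 0)),
    (datetime(2024, 6, 4, 10, 0), datetime(2024, 6, 6, 10, 0)),
    (datetime(2024, 6, 10, 8, 0), datetime(2024, 6, 11, 8, 0)),
]
defects_found = 1  # hypothetical count of defects traced to these changes


def deployments_per_week(records, weeks: float) -> float:
    """Deployment frequency: number of deployments divided by the observation window."""
    return len(records) / weeks


def median_lead_time(records) -> timedelta:
    """Lead time for changes: median gap between request and deployment."""
    return median(deployed - requested for requested, deployed in records)


def defects_per_deployment(defects: int, records) -> float:
    """Defect rate expressed per deployment."""
    return defects / len(records)


print(deployments_per_week(changes, weeks=2))                    # 1.5
print(median_lead_time(changes))                                 # 2 days, 0:00:00
print(round(defects_per_deployment(defects_found, changes), 2))  # 0.33
```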

I am still training to help companies achieve such an architecture, but I believe that by focusing on these areas, I can help companies build a comprehensive picture of success and continuously refine their serverless implementation.

Let's get to the Architecture, v1

Onward to the diagramming.

Overall Architecture - Serverless Architecture using Amazon AWS - Part 1 - without security layer and network layer

After backward planning with AWS whitepapers, I know my diagram could improve by adding more detailed data flow representations. If you look at the AWS documentation on microservices architecture, you'll see that my diagram doesn't capture the flow of information as well as theirs does. By adding some extra details, I can make the diagram more comprehensive and useful.

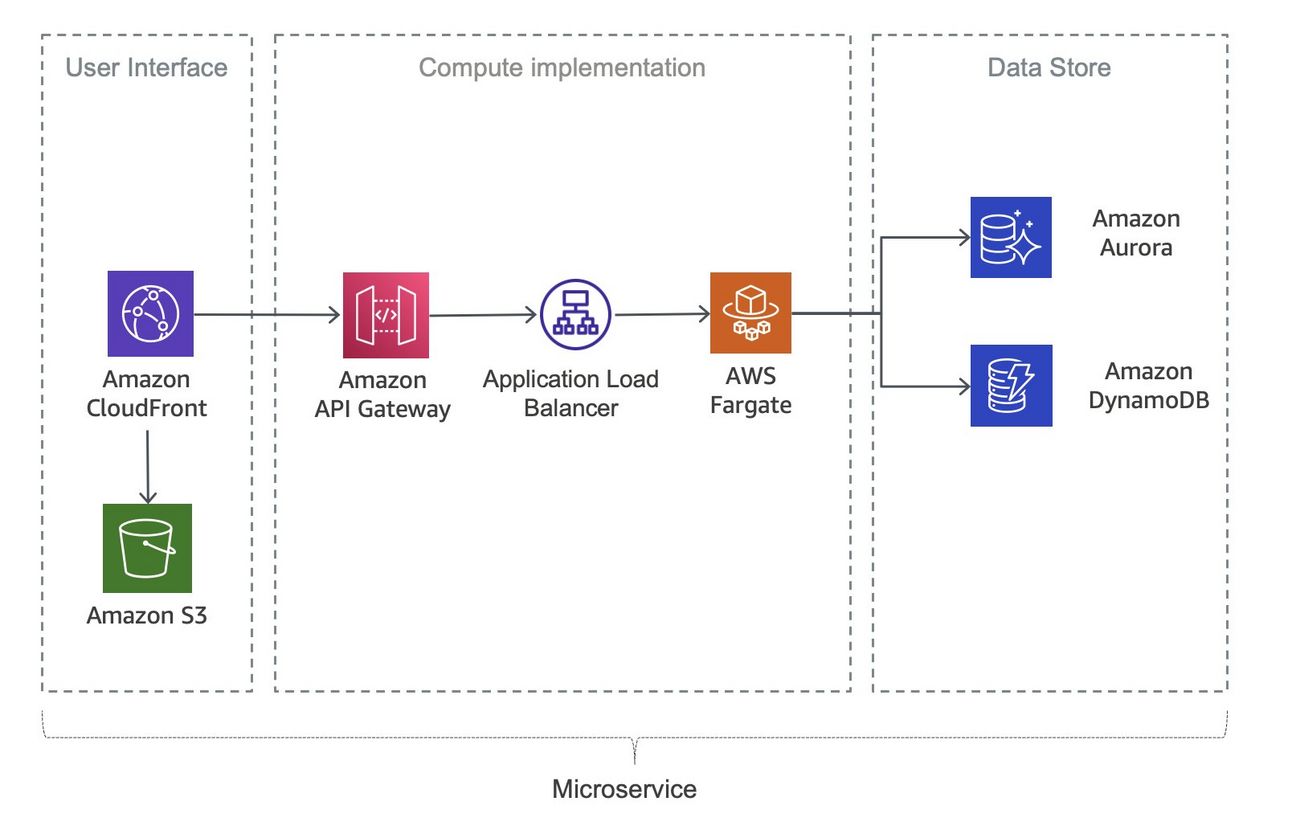

Here is how AWS illustrates a serverless implementation using containers with AWS Fargate. While I am not using microservices, it is clear that this tiered approach is far more detailed, yet still simple:

Figure shown: AWS Serverless microservice using AWS Fargate

Diagramming aside, I also wanted to understand the metrics and business impacts, which put the customer's needs first, and to learn how to use serverless components effectively to give my clients a more scalable experience. From reading and taking the QCC training on AWS, I have learned several key considerations this architecture should address:

DATA

Data Security: Ensure all data at rest and in transit is encrypted. Use AWS IAM roles and policies to manage permissions.
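To make least-privilege IAM concrete, here is a sketch that builds an IAM policy document allowing the backend Lambda function to perform CRUD-style calls on a single DynamoDB table and nothing else. The region, account ID, and table name are placeholders. Encryption at rest is largely handled by the services themselves (DynamoDB encrypts tables at rest by default), so the policy focuses on permissions.

```python
import json


def dynamodb_crud_policy(region: str, account_id: str, table_name: str) -> dict:
    """IAM policy allowing only item-level CRUD and Query on one DynamoDB table."""
    table_arn = f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "TableCrudOnly",
                "Effect": "Allow",
                # No Scan, no table management actions: only what the API needs.
                "Action": [
                    "dynamodb:GetItem",
                    "dynamodb:PutItem",
                    "dynamodb:UpdateItem",
                    "dynamodb:DeleteItem",
                    "dynamodb:Query",
                ],
                "Resource": table_arn,
            }
        ],
    }


# Placeholder account and table; attach the result to the Lambda execution role.
policy = dynamodb_crud_policy("us-east-1", "123456789012", "Orders")
print(json.dumps(policy, indent=2))
```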

LATENCY

Latency: Utilize AWS edge locations with CloudFront to minimize latency for static content.
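CloudFront can only cut latency for content it keeps in its edge caches, and how long it keeps an object depends on the distribution's cache policy and, within that policy's TTL bounds, the Cache-Control metadata stored on the S3 object. A minimal sketch, assuming the site's CSS and JS file names are fingerprinted on each build; the rules and TTL values are illustrative choices, not AWS defaults.

```python
from pathlib import PurePosixPath

# Illustrative caching rules (assumptions, not AWS defaults):
# fingerprinted CSS/JS can be cached for a year, HTML only briefly so new
# deployments show up quickly.
CACHE_RULES = {
    ".html": "public, max-age=60",
    ".css": "public, max-age=31536000, immutable",
    ".js": "public, max-age=31536000, immutable",
    ".png": "public, max-age=86400",
    ".jpg": "public, max-age=86400",
}
DEFAULT_RULE = "public, max-age=300"


def cache_control_for(key: str) -> str:
    """Pick a Cache-Control value for an S3 object key based on its file extension."""
    suffix = PurePosixPath(key).suffix.lower()
    return CACHE_RULES.get(suffix, DEFAULT_RULE)


for key in ["index.html", "assets/app.3f9a1c.js", "img/hero.PNG", "robots.txt"]:
    print(f"{key:24} -> {cache_control_for(key)}")
```

Under this approach, each value would be set as the `CacheControl` parameter when uploading the object (for example with boto3's S3 `put_object`).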

AGILITY AND SCALABILITY

Scalability: Leverage AWS Lambda for automatic scaling to handle varying loads without the need for manual intervention.
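Lambda scales by running more concurrent executions, and AWS's own rule of thumb estimates the concurrency a workload needs as average requests per second multiplied by average duration in seconds. The sketch below applies that formula and compares the result with a concurrency quota; the 1,000 default reflects the usual starting regional quota, but treat it as an assumption and check the account's actual Service Quotas value. The traffic scenarios are hypothetical.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_ms: float) -> int:
    """Estimate concurrent Lambda executions: requests/second x average duration (seconds)."""
    return math.ceil(requests_per_second * avg_duration_ms / 1000)


def fits_quota(requests_per_second: float, avg_duration_ms: float, quota: int = 1000) -> bool:
    """Check the estimate against an assumed regional concurrency quota."""
    return required_concurrency(requests_per_second, avg_duration_ms) <= quota


# Hypothetical traffic levels for the e-commerce launch: (requests/s, avg ms).
scenarios = {
    "normal day": (50, 200),
    "flash sale": (3000, 200),
    "viral spike": (6000, 250),
}
for name, (rps, duration_ms) in scenarios.items():
    needed = required_concurrency(rps, duration_ms)
    print(f"{name}: ~{needed} concurrent executions, within quota: {fits_quota(rps, duration_ms)}")
```

If a scenario exceeds the quota, Lambda throttles the excess invocations, so the options are requesting a quota increase or reducing average duration.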

COSTING

Cost Management: My professor stresses the importance of saving companies money while producing the best possible solutions for our clients. To do that, I have to monitor and optimize costs using Amazon CloudWatch and AWS Cost Explorer. With those considerations in mind, here is how the first swing at this architecture connects its components:
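Before CloudWatch and Cost Explorer have real usage to report, a rough Lambda bill can be estimated from request count, duration, and memory. The default prices below are assumed x86 on-demand rates and the calculation ignores the free tier; check the current AWS Lambda pricing page for the target region before relying on the numbers.

```python
def lambda_monthly_cost(
    requests: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float = 0.20,   # assumed x86 request price (USD)
    price_per_gb_second: float = 0.0000166667,  # assumed x86 compute price (USD)
) -> float:
    """Rough monthly Lambda cost in USD, ignoring the free tier."""
    # Compute is billed in GB-seconds: duration (s) x memory (GB) per invocation.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = requests / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


# Hypothetical month: 5 million API calls, 200 ms average, 512 MB functions.
print(f"${lambda_monthly_cost(5_000_000, 200, 512):.2f}")  # $9.33
```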

Amazon Cognito:

Manages user authentication.

Correctly connected to Users with a bi-directional arrow labeled "authentication."

Amazon Route 53:

Manages DNS request routing.

Connected to Amazon Cognito and correctly shows routing decisions to static and dynamic content.

Two arrows are used: one labeled "static content" to CloudFront, and another labeled "dynamic content" to API Gateway.

Amazon CloudFront:

Delivers static content.

Connected to Route 53 for static content and retrieves static content from S3.

Amazon S3:

Stores static content.

Correctly connected to CloudFront with an arrow labeled "static content."

Amazon API Gateway:

Manages API requests.

Connected to Route 53 for dynamic content.

AWS Lambda:

Executes backend processing.

Correctly connected to API Gateway with an arrow labeled "backend processing."

Amazon DynamoDB:

Stores dynamic data.

Connected to AWS Lambda for CRUD operations.
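The API Gateway to Lambda to DynamoDB path described above can be sketched as a single handler for an API Gateway REST API with Lambda proxy integration, which passes `httpMethod`, `pathParameters`, and `body` in the event and expects `statusCode`, `headers`, and `body` back. The `/products/{id}` route, the `id` key, and the `InMemoryTable` stand-in are hypothetical; the stand-in mimics only the `get_item`, `put_item`, and `delete_item` calls of a boto3 DynamoDB Table resource so the sketch runs without AWS.

```python
import json


class InMemoryTable:
    """Hypothetical stand-in for a boto3 DynamoDB Table (only the calls used below)."""

    def __init__(self, key_name: str):
        self.key_name = key_name
        self.items = {}

    def get_item(self, Key):
        item = self.items.get(Key[self.key_name])
        return {"Item": item} if item is not None else {}

    def put_item(self, Item):
        self.items[Item[self.key_name]] = Item
        return {}

    def delete_item(self, Key):
        self.items.pop(Key[self.key_name], None)
        return {}


def _response(status: int, payload):
    """Shape a Lambda proxy integration response for API Gateway."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": "" if payload is None else json.dumps(payload),
    }


def make_handler(table):
    """Build a handler for a hypothetical /products/{id} resource."""

    def handler(event, context):
        method = event.get("httpMethod")
        product_id = (event.get("pathParameters") or {}).get("id")
        if not product_id:
            return _response(400, {"message": "missing id"})
        if method == "GET":  # Read
            item = table.get_item(Key={"id": product_id}).get("Item")
            return _response(404, {"message": "not found"}) if item is None else _response(200, item)
        if method == "PUT":  # Create or update
            item = {**json.loads(event.get("body") or "{}"), "id": product_id}
            table.put_item(Item=item)
            return _response(200, item)
        if method == "DELETE":  # Delete
            table.delete_item(Key={"id": product_id})
            return _response(204, None)
        return _response(405, {"message": "method not allowed"})

    return handler


table = InMemoryTable(key_name="id")
handler = make_handler(table)
put = handler({"httpMethod": "PUT", "pathParameters": {"id": "p1"},
               "body": json.dumps({"name": "Mug"})}, None)
get = handler({"httpMethod": "GET", "pathParameters": {"id": "p1"}}, None)
print(put["statusCode"], get["body"])  # 200 {"name": "Mug", "id": "p1"}
```

Against real DynamoDB, the handler would be built once per container with `make_handler(boto3.resource("dynamodb").Table("Products"))`. One caveat: boto3 returns numeric attributes as `Decimal`, which `json.dumps` cannot serialize without a custom encoder.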

Other layers are still needed for V2, such as:

Networking Details (Availability Zones)

Security Details (such as WAF and IAM)

Data details

AI layer, if any
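Most of the v1 services (Lambda, API Gateway, DynamoDB, S3) are regional and already spread across Availability Zones by AWS, so a VPC only enters the picture if V2 moves functions or other resources into private networking. If it does, the networking diagram usually starts from a subnet plan like the one this sketch produces: one public and one private subnet per Availability Zone, carved from the VPC CIDR with Python's `ipaddress` module. The CIDR block, /24 size, and AZ names are assumptions.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, azs: list, new_prefix: int = 24) -> list:
    """Carve one public and one private subnet per AZ out of the VPC CIDR."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = []
    for tier in ("public", "private"):
        for az in azs:
            # Take the next unused block of the requested size.
            plan.append((tier, az, str(next(blocks))))
    return plan


for tier, az, cidr in plan_subnets("10.0.0.0/16", ["us-east-1a", "us-east-1b"]):
    print(f"{tier:8} {az:11} {cidr}")
```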

Multiple Diagrams or ONE LARGE Diagram?

When diagramming Enterprise user flows and experiences, I usually provide an overall purview that also encompasses data flows, in case there are data and technical stakeholders. However, I am learning that Cloud Architecture is much different: it is generally not considered best practice to show every single detail in a single architecture diagram. Instead, the most effective approach is to create multiple layers or separate diagrams, each focusing on a specific aspect of the architecture, which is what “layered” diagrams are. For interactive and layered diagrams, I will consider using tools such as Draw.io, Figma.com, AWS Perspective, or Cloudcraft.

Layered means separate diagrams (most times)

These “layered” diagrams are organized per audience: network diagrams for the Networking Team, security diagrams for the Security Team, and data diagrams for the Data Team. I am also learning that keeping diagrams separate is great for clarity, as long as they belong to a unified documentation set where references and links between diagrams are clearly stated.

Reasons for Layered Diagrams:

Clarity: Separate diagrams for different aspects (network, security, data flow, etc.) make it easier to understand each component without overwhelming the viewer.

Audience: Different stakeholders (developers, security teams, network engineers, management) need different levels of detail and focus.

Maintainability: Updating and maintaining diagrams is easier when they are segmented into focused areas.

Focus on Key Areas: Each diagram can highlight critical areas specific to its focus, ensuring that important details are not lost.

Best Practices for Creating Layered Diagrams:

Again, I am still training in this, but the architecture above, riddled with not-so-great decisions that I am improving, would be considered a high-level overview of how our startup can implement a serverless solution for its infrastructure. Per the veterans in this business, whom I honor and hold in very high respect, these are some of the best practices for creating “layered” diagrams.

For the record, I had planned to submit one large diagram full of nodes and connections to every single tier at a highly detailed level.

I stand corrected, and gladly, I might add. On to the best practices; do email me by clicking here to add your own. I am always looking to train and learn from those who are willing to teach:

High-Level Overview: Start with an overview diagram that provides a general understanding of the entire architecture. This should be less detailed and more conceptual.

Contents: Major services, basic data flow, key components, and high-level interactions.

Audience: Executives, project managers, clients, and non-technical stakeholders.

Network Architecture: Create a detailed network diagram showing VPC configurations, subnets (public/private), routing tables, and internet gateways.

Contents: VPCs, subnets, NAT gateways, internet gateways, routing tables, and security groups.

Audience: Network engineers, system administrators.

Security Architecture: Separate diagram focusing on security aspects such as IAM roles, policies, security groups, encryption methods, and compliance services.

Contents: IAM roles, policies, security groups, KMS keys, encryption methods, and monitoring services like AWS Config, GuardDuty, and CloudTrail.

Audience: Security teams, compliance officers.

Data Flow Diagram: Illustrate how data moves through the system, including data sources, processing steps, storage locations, and data sinks.

Contents: Data sources, ETL processes, data stores, data consumers, and backup/recovery strategies.

Audience: Data engineers, data architects.

Application Architecture: Focus on the logical flow of the application, detailing microservices, databases, message queues, and interaction points.

Contents: Microservices, API gateways, message queues, databases, and service interactions.

Audience: Developers, software architects.

Cost Management: Diagrams or tables that detail the cost aspects, showing where major expenses are and potential cost-saving measures.

Contents: Service usage, potential cost-saving opportunities, and cost management tools like AWS Cost Explorer.

Audience: Financial officers, budget managers.

This is not too different from the UX protocol. We meet with several groups and, depending on the audience, provide clear documentation or a prototype that outlines the problem, the solutions, costs, research, and any edge cases (similar to disaster recovery plans in cloud).

I learned many lessons from this architecture, and I have yet to build it. Some say architects do not build; however, building is how I learn best.

Part 2: More Security and Additional Business considerations (coming soon in next post)

In part 2 of this serverless solution, the Startup company now faces new challenges, including increased security threats, compliance with data protection regulations, and the need for more detailed monitoring and analytics.

To assist with diagramming this flow, I will be using my class notes from QCC, the AWS Well-Architected Framework, reference architectures, and my “logical order” prompt technique to help connect the detailed networking, security, and data diagrams.

Logical Order Prompting Technique? What is that?

Using Logical Order Prompting in LLM Prompts for AWS Architecture

A key prompting strategy I discovered while working on the Forage project for Email Security involves asking the Large Language Model (LLM) to create architectures in a logical order.

This technique involves asking an LLM to create output (results, answers, observations, reports, objects) in a logical sequence, similar to ordering at a restaurant:

Select topic (main order)

Provide specific details (menu items)

Request step-by-step instructions (sides)

Give context/examples (coupons)
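The four-part order above can be captured as a small prompt template so each request to the LLM follows the same sequence. This is a minimal sketch of one way to encode the technique; the `LogicalOrderPrompt` class and its section labels are hypothetical, not a fixed format.

```python
from dataclasses import dataclass, field


@dataclass
class LogicalOrderPrompt:
    """Hypothetical template: sections are always emitted in the same logical order."""
    topic: str                                   # 1. main order
    details: list                                # 2. menu items
    steps_request: str                           # 3. sides
    context: list = field(default_factory=list)  # 4. coupons (optional)

    def render(self) -> str:
        sections = [
            f"1. Topic: {self.topic}",
            "2. Specific details:\n" + "\n".join(f"   - {d}" for d in self.details),
            f"3. Instructions: {self.steps_request}",
        ]
        if self.context:
            sections.append(
                "4. Context/examples:\n" + "\n".join(f"   - {c}" for c in self.context)
            )
        return "\n\n".join(sections)


prompt = LogicalOrderPrompt(
    topic="Serverless e-commerce architecture on AWS",
    details=["Cognito for authentication",
             "API Gateway, Lambda and DynamoDB for dynamic content",
             "CloudFront and S3 for static content"],
    steps_request="List the security layer to add, step by step, one AWS service per step.",
    context=["Audience: security team",
             "Reference: AWS Well-Architected Framework, Security Pillar"],
)
print(prompt.render())
```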

This prompting technique helps me form key personas for my thread, leading to more nuanced answers and a very clear output that I can follow step by step. My order comes out mostly correct, and I can still get creative with a higher top-p (more ingredients) if I so choose.

Simply put, this approach helps create detailed, structured outputs that can be integrated into workflows and translated into code, plaintext flows, or even CSV blocks.

That's about it for part 1 of this Serverless journey. Please stay tuned for Part 2, where I will be redesigning this structure. If you have feedback, I am here to learn from you, so book a session with me by clicking here. I would love to talk some Enterprise, Data, Security, Cloud and UX with you.

Part 2 on the come up, getting it popping soon. Speak soon friends 💪

-Jonny VPC